One problem that has attracted attention as the social implementation of AI progresses is “discrimination by AI,” in which AI makes discriminatory decisions.

We cannot rule out the possibility that AI will continue to discriminate unintentionally, for example by picking up and reinforcing latent discriminatory tendencies in humans through the huge amounts of data it learns from. To create fair AI systems, it is also important to build up discussion and, where necessary, to impose restrictions through rules.

In response to these issues, the Ethics Committee of the Japanese Society for Artificial Intelligence released the “Statement on Machine Learning and Fairness” on December 10, 2019, and a symposium on the theme of “Machine Learning and Fairness,” based on that statement, was held on January 9, 2020.

Against this background, this article explains examples of “discrimination by AI,” its causes, and efforts around the world to address it. We also propose measures to be taken against “discrimination by AI” and the attitude needed to spread “trustworthy AI.”

Contents

- Six cases of “discrimination by AI”

- 1. Racism by Recidivism Prediction System

- 2. Gender discrimination in the hiring system

- 3. Discrimination in advertising

- 4. Discrimination in Pricing

- 5. Racism in image search

- 6. Gender discrimination in translation tools

- Six causes of “discrimination by AI”

- 1. Improper definition of target variable

- 2. Improper labeling of training data

- 3. Discrimination inherent in training data

- 4. Discrimination mixed in when selecting feature values

- 5. Discriminatory Substitution of Features

- 6. Deliberate discrimination

- Initiatives around the world

- AI Now Institute

- Montreal AI Ethics Institute

- Guidance issued by the European Commission

- 1. Development of guidelines

- 2. Establishment of inspection system

- 3. Dissemination of AI ethics education

- Attitude toward the spread of “trustworthy AI”

Six cases of “discrimination by AI”

Many cases of “discrimination by AI” have already been reported. In 2018, Professor Frederik Zuiderveen Borgesius of the Faculty of Law, University of Amsterdam, the Netherlands, submitted a report to the Council of Europe entitled “Discrimination, Artificial Intelligence, and Algorithmic Decision-Making.” This section introduces six representative cases.

1. Racism by Recidivism Prediction System

In May 2016, the investigative news outlet ProPublica published an article titled “Machine Bias,” which accused COMPAS, a recidivism prediction system used in the United States, of making racist predictions. Specifically, Black defendants who did not go on to reoffend were wrongly labeled as high risk at almost twice the rate of white defendants, even though race was not one of the system's inputs.
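To make this kind of disparity concrete, the sketch below computes a per-group false-positive rate: the share of people who did not reoffend but were still flagged as high risk. The records, the group labels “A” and “B,” and the function name `false_positive_rates` are hypothetical illustrations, not COMPAS data or ProPublica's code.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Per-group false-positive rate.

    Each record is (group, predicted_high_risk, reoffended). A false positive
    is a person flagged as high risk who did not actually reoffend.
    """
    flagged = defaultdict(int)    # non-reoffenders flagged as high risk
    negatives = defaultdict(int)  # all non-reoffenders
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}

# Hypothetical toy data, not ProPublica's figures.
records = (
    [("A", True, False)] * 4 + [("A", False, False)] * 6
    + [("B", True, False)] * 2 + [("B", False, False)] * 8
    + [("A", True, True)] * 5 + [("B", True, True)] * 5
)
rates = false_positive_rates(records)
print(rates)  # {'A': 0.4, 'B': 0.2}
```

Note that the reoffenders (the last two groups of records) do not affect the result: a false-positive rate only looks at people who did not reoffend.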

2. Gender discrimination in the hiring system

An article published by Reuters in October 2018, “Amazon scraps secret AI recruiting tool that showed bias against women,” reported that Amazon had stopped using a recruitment system it developed after finding that the system tended to rate men more highly than women. This case is also covered in detail in the blog post “Is AI Sexist?”, which points out two causes. The first is that a high proportion of female students major in the humanities; the second is that few women graduate from computer science departments. For these two reasons, the system is thought to have become less likely to select women.

3. Discrimination in advertising

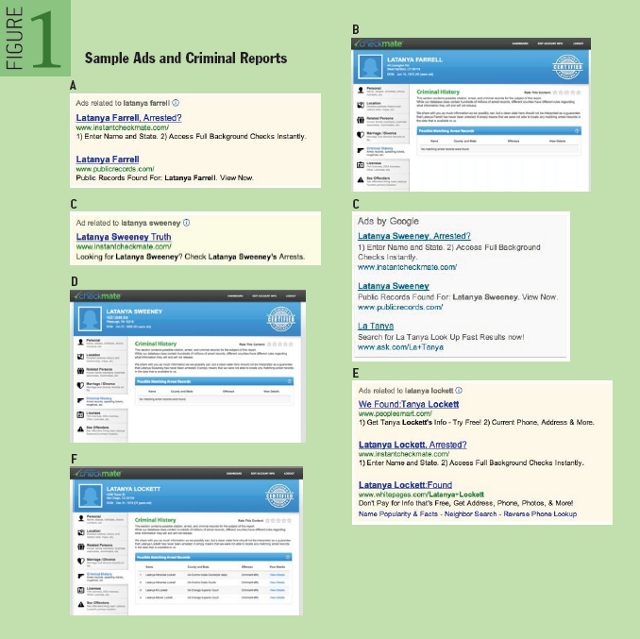

Professor Latanya Sweeney of Harvard University, who studies how technology can solve political and social problems, published a report in 2013 titled “Discrimination in Online Ad Delivery,” which found that searching for certain names displayed ads suggesting an arrest record. To check whether Google's ad display behaved in a racist way, Professor Sweeney studied the ads shown when she searched Google for a person's name. She first searched for three names with the first name Latanya: Latanya Farrell, Latanya Sweeney, and Latanya Lockett, the second being her own name (Professor Sweeney is a Black woman). The ads shown for all three searches carried the headline “Does ○○ have an arrest record?” (A, C, and E in the image above). The ads linked to instantcheckmate.com, a service that lets individuals look up arrest records. Of the three people searched, only Latanya Lockett actually had an arrest record.

Next, when she searched Google for Kristen Haring, Kristen Sparrow, and Kristen Lindquist, no ads linking to instantcheckmate.com were displayed, even though all three had arrest records. Furthermore, when she searched for Jill Foley, Jill Schneider, and Jill James, who also had arrest records, ads linking to instantcheckmate.com did appear, but none carried text suggesting an arrest record, such as “Does ○○ have an arrest record?”

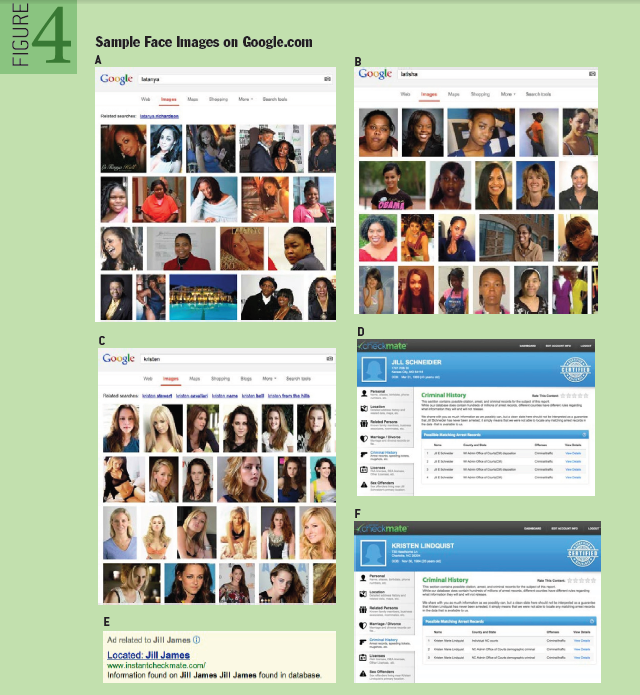

Finally, when she ran Google Image searches for the four first names Latanya, Latisha, Kristen, and Jill, the searches for Latanya and Latisha returned mostly images of Black people, while those for Kristen and Jill returned mostly images of white people (see the image above). This result is evidence that Google's search algorithm associates Latanya and Latisha with Black people, and Kristen and Jill with white people.

These experimental results revealed racist behavior in Google search: ads suggesting an arrest record were displayed when the searched name was presumed to be a Black name, but not when it was presumed to be a white name.
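One common way to check whether a gap in ad delivery is more than chance is a two-proportion z-test. The sketch below is a swapped-in illustration, not the statistical analysis used in Sweeney's report, and the counts passed to the hypothetical `two_proportion_z` function are invented.

```python
import math

def two_proportion_z(hits_a, n_a, hits_b, n_b):
    """Pooled two-proportion z statistic for hits_a/n_a - hits_b/n_b."""
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)  # rate assuming no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / se

# Hypothetical counts: searches (out of 100 per name group) whose ad
# suggested an arrest record.
z = two_proportion_z(hits_a=60, n_a=100, hits_b=40, n_b=100)
print(round(z, 2))  # 2.83
```

Under the usual normal approximation, |z| above roughly 1.96 indicates a difference that is significant at the 5% level.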

4. Discrimination in Pricing

In September 2015, ProPublica published an article titled “The Tiger Mom Tax: Asians Are Nearly Twice as Likely to Get a Higher Price from Princeton Review,” reporting allegations that the online tutoring service Princeton Review priced its services in a racially discriminatory way. The company charged different prices in different regions, and customers in areas with a high density of Asian residents were 1.8 times as likely to be offered a higher price as customers in other areas.

The Princeton Review explained that its pricing involved no racial or ethnic discrimination and that the difference was simply the result of “optimizing prices by region.”
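This explanation illustrates how a rule that never looks at ethnicity can still produce unequal outcomes when region correlates with ethnicity. In the sketch below, the regions, prices, and customer counts are all hypothetical; the point is only the arithmetic of averaging each region's price over each group's customers.

```python
# Hypothetical regions: (price, customers in group X, other customers).
# The pricing rule depends only on the region, never on group membership.
regions = {
    "region_high": (130, 60, 40),
    "region_low": (100, 10, 90),
}

def average_price_by_group(regions):
    """Average price paid by each group when prices are set per region only."""
    paid_x = paid_other = count_x = count_other = 0
    for price, n_x, n_other in regions.values():
        paid_x += price * n_x
        paid_other += price * n_other
        count_x += n_x
        count_other += n_other
    return {"group_x": paid_x / count_x, "other": paid_other / count_other}

result = average_price_by_group(regions)
print(result)
```

Because group X is concentrated in the higher-priced region, its members pay more on average even though the rule itself is group-blind.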

5. Racism in image search

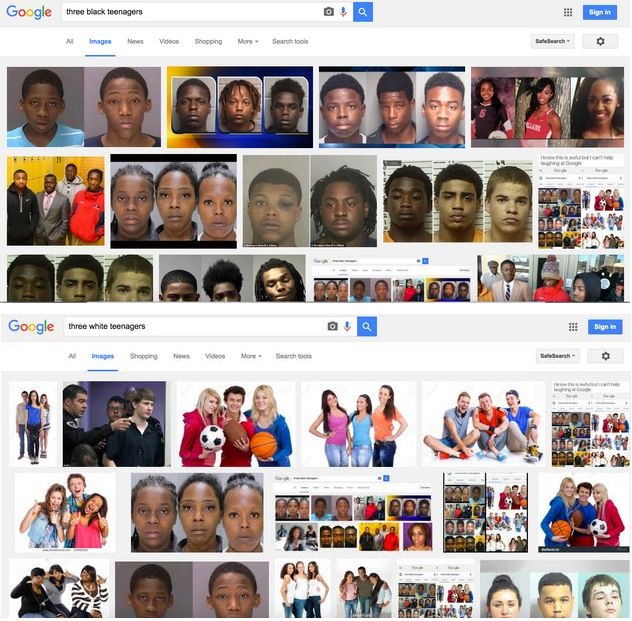

A 2016 article published by HuffPost UK, “Three Black Teenagers: Is Google Racist?”, reported that a Google Image search for “three black teenagers” returned mainly mugshot-style photos, while a search for “three white teenagers” returned ordinary photos of three white teenagers (see the image above: the upper image shows the results for “three black teenagers,” and the lower image shows the results for “three white teenagers”).

In response to these search results, Google commented that “offensive depictions of sensitive and important subjects online can affect image search results for specific queries,” and stated that the results do not reflect the company's own opinions or beliefs.

6. Gender discrimination in translation tools

In a 2017 paper, “Semantics derived automatically from language corpora contain human-like biases,” Associate Professor Aylin Caliskan, currently affiliated with George Washington University in the United States, discusses linguistic biases associated with gender. Specifically, when Google Translate was used to translate Turkish sentences meaning “[this person] is a doctor” and “[this person] is a nurse” into English, it produced “He is a doctor” and “She is a nurse.” Turkish third-person singular pronouns have no gender, so this example shows that the bias “doctors are male and nurses are female” crept in during translation.

The Google Translate problem in this example was later fixed: when the Turkish phrase is now translated into English, masculine and feminine translations are displayed side by side (see the image below; for details of this fix, see the Google AI Blog post “Providing Gender-Specific Translations in Google Translate”).
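Caliskan's paper measures such biases with word-embedding association tests (WEAT). The sketch below computes a simplified, WEAT-style association score for a single word: its mean cosine similarity to male attribute words minus its mean cosine similarity to female attribute words. The two-dimensional vectors are hand-picked, hypothetical stand-ins for embeddings learned from a corpus, and the full test in the paper also aggregates over sets of target words and reports an effect size.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def association(w, attrs_a, attrs_b):
    """WEAT-style s(w, A, B): mean cosine to A minus mean cosine to B."""
    mean_a = sum(cosine(w, a) for a in attrs_a) / len(attrs_a)
    mean_b = sum(cosine(w, b) for b in attrs_b) / len(attrs_b)
    return mean_a - mean_b

# Hypothetical 2-D vectors, hand-picked to mimic a gender-biased corpus.
vec = {
    "he": (1.0, 0.0), "man": (0.9, 0.1),
    "she": (0.0, 1.0), "woman": (0.1, 0.9),
    "doctor": (0.8, 0.3), "nurse": (0.2, 0.9),
}
male = [vec["he"], vec["man"]]
female = [vec["she"], vec["woman"]]

# Positive score: closer to the male words; negative: closer to the female words.
scores = {w: association(vec[w], male, female) for w in ("doctor", "nurse")}
print(scores)
```

A translation system built on embeddings with this geometry would tend to pick “he” for “doctor” and “she” for “nurse” when the source language leaves gender unspecified.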

Six causes of “discrimination by AI”

The report by Professor Borgesius mentioned above also discusses the causes of discrimination by AI and lists the following six. Some of them are difficult to notice even for the developers of AI systems themselves.

1. Improper definition of target variable

Developers must first define what the system should predict (technically called the target variable). For example, when developing an employee evaluation system, you have to define what makes a good employee. If “never late” is treated as a criterion for a good employee, employees who live far from work may be unfairly rated lower. Human discrimination and prejudice can creep in at the stage of defining the target variable.
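The sketch below shows how the choice of target variable alone can change who counts as a “good employee.” The `Employee` records and both labeling rules (a lateness threshold and an output threshold) are hypothetical.

```python
from collections import namedtuple

# Hypothetical employees; commute time is never used by either rule.
Employee = namedtuple("Employee", "name commute_min times_late output_score")
staff = [
    Employee("A", 15, 0, 70),
    Employee("B", 20, 1, 65),
    Employee("C", 90, 6, 85),
    Employee("D", 100, 5, 80),
]

def good_if_punctual(e, max_late=2):
    """Target variable 1: 'good' means rarely late."""
    return e.times_late <= max_late

def good_if_productive(e, min_score=75):
    """Target variable 2: 'good' means high output."""
    return e.output_score >= min_score

print([e.name for e in staff if good_if_punctual(e)])    # ['A', 'B']
print([e.name for e in staff if good_if_productive(e)])  # ['C', 'D']
```

In this toy data, the punctuality-based definition labels exactly the long-commute employees as “not good,” even though commute time is never an explicit input.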

2. Improper labeling of training data

Discrimination may creep in when training data is labeled. In the 1980s, a British medical school developed a program to sort application forms. The training data given to this program ranked application forms in order of how likely the applicant's background was to lead to admission, and in this data women and immigrants were ranked near the bottom. As a result, the sorting program was later found to treat women and immigrants unfairly.
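A simple audit for this kind of labeling bias is to compare historical labels for applicants with the same qualification level but different group membership. The data and the `label_gap_by_level` helper below are hypothetical; a persistent gap at equal qualification suggests that the labels, not the applicants, carry the bias.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical historical rankings: (qualification_level, group, score_given).
history = [
    (3, "majority", 90), (3, "minority", 75),
    (2, "majority", 70), (2, "minority", 55),
    (2, "majority", 72), (2, "minority", 57),
]

def label_gap_by_level(rows, group_a, group_b):
    """Mean label of group_a minus group_b, per qualification level present in both."""
    scores = defaultdict(list)
    for level, group, score in rows:
        scores[(level, group)].append(score)
    gaps = {}
    for level in sorted({lvl for lvl, _, _ in rows}):
        a, b = scores.get((level, group_a)), scores.get((level, group_b))
        if a and b:
            gaps[level] = mean(a) - mean(b)
    return gaps

print(label_gap_by_level(history, "majority", "minority"))
```

A model trained to reproduce these scores would learn the same 15-point penalty for the minority group.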

3. Discrimination inherent in training data

Discrimination may also be introduced when training data is collected. For example, if you collect data on crime by region, you may find that areas with more immigrants have higher recorded crime rates. However, this may be because the police concentrated their enforcement on areas where immigrants live, which increased the number of crimes recorded there.
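The policing example can be reduced to a short calculation. In the sketch below, two hypothetical areas have the same true offence rate, but different shares of offences end up in the records because enforcement intensity differs; all numbers are invented for illustration.

```python
# Hypothetical areas with the SAME true offence rate but different enforcement.
areas = {
    "area_1": {"population": 10_000, "true_rate": 0.02, "detection": 0.6},
    "area_2": {"population": 10_000, "true_rate": 0.02, "detection": 0.2},
}

def recorded_rate(area):
    """Crime rate as it appears in the data: only detected offences are recorded."""
    offences = area["population"] * area["true_rate"]
    recorded = offences * area["detection"]
    return recorded / area["population"]

for name, area in areas.items():
    print(name, round(recorded_rate(area), 4))
```

A model trained on these records would conclude that area_1 has three times the crime of area_2, although the underlying behavior is identical.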

4. Discrimination mixed in when selecting feature values

Discrimination may creep in when selecting the features used by a prediction system. For example, suppose that, when developing a system for recruiting university graduates, we choose as a feature whether an applicant graduated from a prestigious university. Graduates of prestigious universities with high tuition fees may be racially skewed. In that case, the system may unintentionally become complicit in racism.
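Before using a feature, a developer can check how strongly it is associated with a protected attribute. The sketch below computes the Pearson correlation between two binary columns (which equals the phi coefficient); the applicant data and the 0.3 review threshold are hypothetical choices, not an established standard.

```python
import math

def pearson(xs, ys):
    """Pearson correlation; on two 0/1 columns this equals the phi coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical applicants (same order in both lists).
famous_university = [1, 1, 1, 0, 1, 0, 0, 0]  # candidate feature
group_a = [1, 1, 1, 1, 0, 0, 0, 0]            # protected attribute
REVIEW_THRESHOLD = 0.3  # hypothetical cut-off for "needs human review"

r = pearson(famous_university, group_a)
print(f"r = {r:.2f}, review needed: {abs(r) >= REVIEW_THRESHOLD}")
```

A high correlation does not prove discrimination, but it signals that the feature may act as a stand-in for the protected attribute and deserves a closer look.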

The discrimination in Amazon's recruitment system, described among the cases above, is thought to have been caused by this kind of feature selection. If “graduated in data science” is treated as a feature of excellent candidates, women, who are currently a minority in such departments, may be underrated.

5. Discriminatory Substitution of Features

In some cases, a feature turns out to correlate with something other than what it was meant to capture, and this leads to discrimination. For example, research has shown that an AI system that infers people's tendencies from their social network friendships could be repurposed to infer users' sexual orientation.
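Developers can test for this kind of leakage by asking how well a sensitive attribute can be recovered from the features alone. The sketch below uses the simplest possible method, a majority vote over friends whose attribute is known; the graph, the attribute values, and the `infer_from_friends` helper are hypothetical, and a high accuracy would be a warning sign that the friendship features act as a proxy.

```python
from collections import Counter

# Hypothetical friend lists and a sensitive attribute known for some users.
friends = {
    "u1": ["u2", "u3", "u4"],
    "u5": ["u6", "u7", "u2"],
}
known = {"u2": "yes", "u3": "yes", "u4": "no", "u6": "no", "u7": "no"}
held_out_truth = {"u1": "yes", "u5": "no"}  # hypothetical ground truth for the audit

def infer_from_friends(user):
    """Guess a user's attribute as the most common value among their friends."""
    votes = Counter(known[f] for f in friends[user] if f in known)
    return votes.most_common(1)[0][0] if votes else None

hits = sum(infer_from_friends(u) == v for u, v in held_out_truth.items())
leak_accuracy = hits / len(held_out_truth)
print(f"attribute recovered for {leak_accuracy:.0%} of held-out users")
```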

6. Deliberate discrimination

There are also cases where AI systems are used for deliberately discriminatory purposes. For example, a system that predicts whether a customer is pregnant from their shopping habits can be used effectively for marketing aimed at pregnant women. However, the same system could also be used to identify and dismiss pregnant employees.

Initiatives around the world

Efforts to study the cases and causes of “discrimination by AI” and to take countermeasures are spreading around the world, as described below.

AI Now Institute

The AI Now Institute, founded in 2017 and based at New York University in the United States, conducts research on four areas related to AI: “rights and liberties,” “labor and automation,” “bias and inclusion,” and “safety and critical infrastructure.”

Meredith Whittaker, one of the institute's co-founders, worked in Google's AI research division. She came to believe that the current social implementation of AI, led by giant tech companies, would reinforce a bias favoring white men, and after protesting against Google, she left the company and devoted herself to the institute's work. For more details, see the Medium article “Onward! Another #GoogleWalkout Goodbye.”

The institute has published many articles on Medium about discrimination caused by AI; a recent one discusses discrimination related to the different Apple Card credit limits given to married couples.

Montreal AI Ethics Institute

The Montreal AI Ethics Institute, founded in July 2017 in Montreal, Canada, also researches AI ethics, with the goal of “helping define humanity's place in a world increasingly characterized and driven by algorithms.”

One of the blog posts on the institute's official website, “AI in Finance: 8 Frequently Asked Questions,” argues that the biggest problem with discrimination by AI is the belief that AI is less biased than human judgment. In fact, the mathematical methods themselves can be a source of bias, and this problem is not widely recognized in the first place.

Guidance issued by the European Commission

On April 8, 2019, the European Commission published the “Ethics Guidelines for Trustworthy AI.” The guidelines define trustworthy AI and set out requirements that such AI must meet.

According to the guidelines, “trustworthy AI” should have the following three properties.

- Lawful: it complies with all applicable laws and regulations

- Ethical: it respects ethical principles and values

- Robust: it is robust from both a technical and a social perspective

Trustworthy AI is also expected to meet the following seven requirements:

- Human agency and oversight: AI should support human autonomy and remain subject to human oversight

- Technical robustness and safety: AI should be resilient and safe, with a fallback if technical problems occur, and its behavior should be reproducible

- Privacy and data governance: privacy should be respected and data managed properly

- Transparency: the data, the system, and the AI business model should be transparent and traceable

- Diversity, non-discrimination, and fairness: unfair discrimination should be avoided and diversity ensured

- Societal and environmental well-being: AI should benefit society and the environment

- Accountability: mechanisms should ensure responsibility and accountability for AI systems

The European Commission also published an assessment list for checking whether these requirements are met, and piloted it from June to December 2019.

As described above, AI ethics initiatives are being promoted mainly in countries that lead in AI. At present, however, each country still appears to be at the stage of developing its approach to AI ethics.

What should the Japanese AI industry, and society as a whole, do about the problem of “discrimination by AI” that is being tackled around the world? The minimum measures that should be taken are described below under three headings.

1. Development of guidelines

Guidelines on “discrimination by AI” are essential. Ideally, they should include the following:

- Examples of “discrimination by AI”

- Causes of “discrimination by AI”

- Case studies on preventing and correcting “discrimination by AI”

- Precautions to be taken when developing an AI system to avoid causing discrimination

2. Establishment of inspection system

When AI makes a discriminatory decision, know-how for correcting that decision is also needed. Transparency of AI systems is essential for building such know-how, because the decision process of an AI system must be open to inspection in order to identify what is causing the discrimination.
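As one concrete inspection step, an auditor can compare selection rates across groups. The sketch below computes the ratio of the lowest to the highest selection rate and flags it against the “four-fifths” (0.8) rule of thumb from US employment-selection guidance; the decisions are hypothetical, and this single metric is only one of many possible fairness checks.

```python
def selection_rate(decisions):
    """Share of positive (1) decisions."""
    return sum(decisions) / len(decisions)

def disparate_impact_ratio(decisions_by_group):
    """Lowest group selection rate divided by the highest."""
    rates = {g: selection_rate(d) for g, d in decisions_by_group.items()}
    return min(rates.values()) / max(rates.values()), rates

# Hypothetical decisions (1 = selected) for two groups.
decisions = {
    "group_a": [1, 1, 0, 1, 1, 0, 1, 1, 1, 1],
    "group_b": [1, 0, 0, 1, 0, 0, 1, 0, 1, 1],
}
ratio, rates = disparate_impact_ratio(decisions)
print(rates, round(ratio, 3), "flag" if ratio < 0.8 else "ok")
```

A flagged ratio is a prompt for the kind of inspection described above, not a verdict on its own.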

Transparency of AI systems must be ensured in two respects: algorithms and training data. For algorithms, the spread of “explainable AI” technology, which is currently being researched, can be expected to help, and a beta version of one such framework has already been released.
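Explainable-AI tools differ, but a common model-agnostic idea is permutation importance: shuffle one input feature and measure how much accuracy drops. The sketch below is a generic illustration of that technique, not any particular vendor's framework; the scoring `model`, the rows, and the feature meanings are hypothetical.

```python
import random

def model(row):
    """Hypothetical scoring rule: uses features 0 and 1, ignores feature 2."""
    return 1 if 2 * row[0] + row[1] > 2 else 0

def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(rows, labels, feature, n_repeats=20, seed=0):
    """Mean accuracy drop when one feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        permuted = [r[:feature] + (v,) + r[feature + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(permuted, labels))
    return sum(drops) / n_repeats

rows = [(0, 0, 5), (0, 1, 3), (1, 0, 7), (1, 1, 1),
        (1, 2, 4), (0, 2, 9), (1, 0, 2), (0, 1, 8)]
labels = [model(r) for r in rows]  # labels taken from the model itself for the demo
for f in range(3):
    print(f"feature {f}: importance {permutation_importance(rows, labels, f):.3f}")
```

If a feature that stands in for a protected attribute shows high importance, that is a concrete lead for the inspection described above.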

For training data, when discrimination by AI is suspected, a right to inspect the data must be secured and an inspection system developed. However, disclosure of training data held by public institutions should follow strict rules on the handling of personal information.

3. Dissemination of AI ethics education

AI ethics education for AI developers is also essential. This education should emphasize two points. First, because the cause of discrimination by AI may lie in the developers' own discriminatory attitudes, developers need to re-examine their own ethics. Second, developers should cultivate an attitude of not treating the judgments made by AI as absolute. If this stance takes firm root, then even when discrimination by AI occurs unexpectedly, they will be able to suspend the AI system without hesitation.

Attitude toward the spread of “trustworthy AI”

Machine learning and deep learning, which require training data, are the AI technologies most widely implemented in society today. As mentioned above, the causes of “discrimination by AI” often lie hidden in that essential training data. Therefore, as long as training data is collected from the real world, we cannot rule out the possibility that AI will reproduce, and even amplify, real-world discrimination.

The first step in preventing AI from reproducing and amplifying discrimination and prejudice is to return to the basic question: “For what purpose is AI being implemented?”

Of course, “trustworthy AI” must not make judgments based on discrimination or prejudice that harm anyone's dignity. Therefore, if training data contains causes of discrimination or prejudice, they should be actively removed or corrected. Conversely, ignoring or even justifying discrimination by AI on the grounds that the data comes from the real world goes against society's expectations for the spread of trustworthy AI.
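One published technique for correcting skewed training data is reweighing (Kamiran and Calders), which gives each (group, label) combination the weight P(group) * P(label) / P(group, label), so that group and label become statistically independent in the weighted data. The sketch below is a minimal illustration with hypothetical samples and is not presented as the method this article has in mind.

```python
from collections import Counter

def reweighing_weights(samples):
    """Weight for each (group, label) pair: P(group) * P(label) / P(group, label)."""
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    pair_counts = Counter(samples)
    # Count form of the same ratio: (c_g / n) * (c_y / n) / (c_gy / n)
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * pair_counts[(g, y)])
        for (g, y) in pair_counts
    }

def weighted_positive_rate(samples, weights, group):
    """Share of positive labels in a group after applying the weights."""
    total = sum(weights[s] for s in samples if s[0] == group)
    positive = sum(weights[s] for s in samples if s[0] == group and s[1] == 1)
    return positive / total

# Hypothetical skewed data: group "a" mostly labeled 1, group "b" mostly 0.
samples = [("a", 1)] * 6 + [("a", 0)] * 2 + [("b", 1)] * 2 + [("b", 0)] * 6
weights = reweighing_weights(samples)
for group in ("a", "b"):
    print(group, round(weighted_positive_rate(samples, weights, group), 3))
```

The raw positive rates are 0.75 and 0.25; after weighting, both groups have the same positive rate, and the weights can be passed to any learner that accepts per-sample weights.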

As the social implementation of AI advances further, unexpected discrimination by AI is likely to occur. If we can return to the principles that “trustworthy AI” should meet whenever such an unexpected problem arises, we can avoid mistaken responses such as ignoring or covering up the discrimination.